Influential NLP Papers on Google Scholar

September 05, 2020

Natural language processing (NLP) is a complex and evolving field. Part computer science, part linguistics, part statistics—it can be a challenge deciding where to begin. Books and online courses are a great place to start, and project-based learning is always a good idea, but at some point it becomes necessary to dig deeper, and that means looking at the academic literature.

Reading academic literature is an art unto itself, and just because a paper is popular doesn’t mean it’s the right place for a beginner. However, there is something to be said for papers that have both withstood the test of time and been widely accepted by experts. If a paper has been consistently cited in academic literature, then it’s probably fair to say that the paper is influential.

There are a variety of sources for finding academic papers online, but one of the best is Google Scholar (GS), which helpfully provides citation data. We’re going to use citation data as our measure of influence. Unfortunately, GS doesn’t provide an API or other easy way to programmatically access data, so we manually downloaded the first 1,000 search results for the term “natural language processing” and then parsed and analyzed the data.

Data Exploration

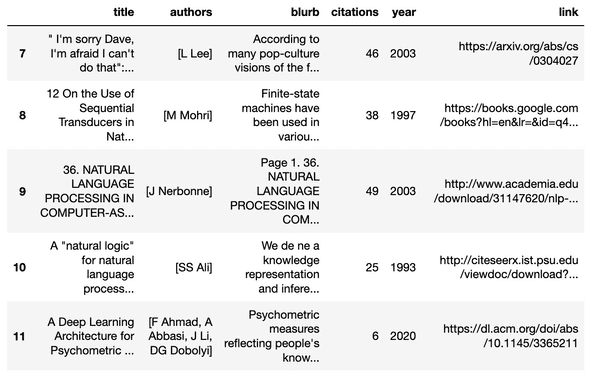

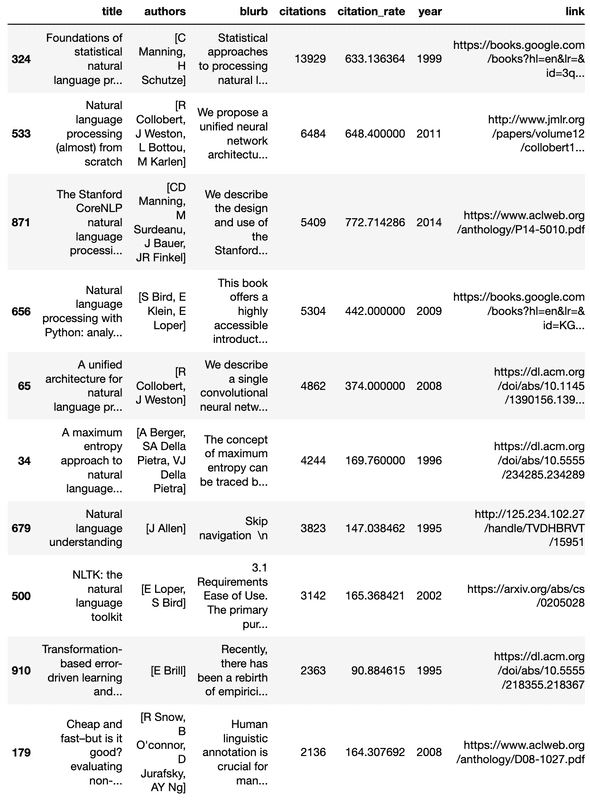

Before we get started, let’s take a look at our data and see what we have to work with. In total, there are 973 papers in our dataset (after removing rows with missing data). For each row we have columns for the paper title, authors, blurb, citations, year, and link.

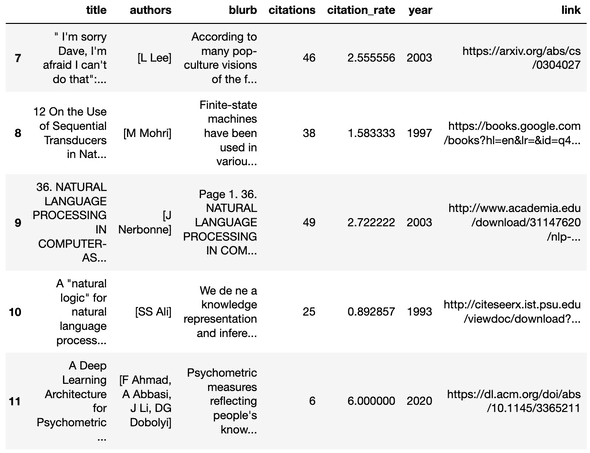

We have a lot of information to work with here, but unfortunately we don’t have the full abstracts or the full paper text. That will have to wait for a future project. Still, we can do a lot with the citation data alone. But is citation data the right metric? Papers that were published a long time ago have an advantage because they have had more time to gather citations. Let’s add a citation_rate column to show how many citations a given paper has received per year since publication.
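Here is a minimal sketch of how a column like citation_rate could be computed. It is not the exact code behind this analysis: the rows are made up, the reference year of 2020 is taken from the date of this post, and counting the publication year as a full year (so that a 2020 paper is divided by 1 rather than 0) is an assumption.

```python
CURRENT_YEAR = 2020  # assumption: the year this post was written


def citation_rate(citations, year, current_year=CURRENT_YEAR):
    """Average citations per year since publication.

    Assumption: the publication year counts as one full year, so a paper
    published in current_year is divided by 1 rather than 0.
    """
    years_in_print = max(current_year - year + 1, 1)
    return citations / years_in_print


# Hypothetical rows for illustration, not taken from the real dataset.
papers = [
    {"title": "Paper A", "year": 2000, "citations": 2100},
    {"title": "Paper B", "year": 2020, "citations": 50},
]

# Add the new column to every row.
for paper in papers:
    paper["citation_rate"] = citation_rate(paper["citations"], paper["year"])
```

Other conventions are possible, such as counting only the elapsed fraction of the current year, and they would change the rates for very recent papers the most.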

Great! Now we have at least two metrics to use when judging how influential a particular paper has been in the world of NLP: total citations and citation rate since year of publication.

NLP Research Over Time

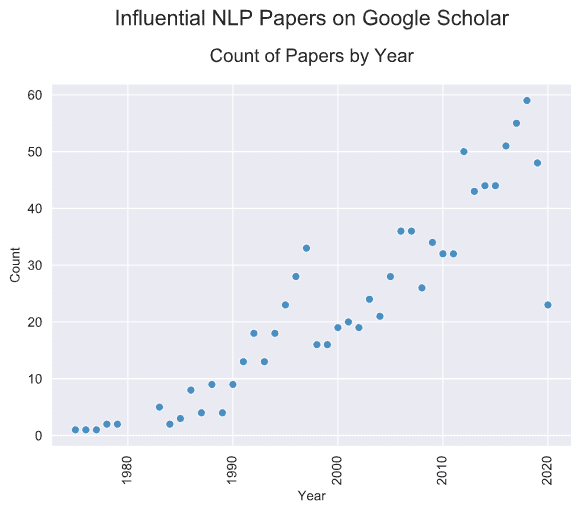

Before we start exploring individual papers it would be useful to get a high-level view of our data. When were the most influential NLP papers produced? How has that trend changed over time? Let’s plot the production of NLP papers by year and see how things look.
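A plot like this starts from a simple count of papers per publication year. The sketch below shows one way to get that count with the standard library. The list of years is invented for illustration, and the text bar chart is only a stand-in for a real plotting library.

```python
from collections import Counter

# Hypothetical publication years, one entry per paper.
years = [1999, 2008, 2008, 2011, 2011, 2011]

# Count how many papers were published in each year.
papers_per_year = Counter(years)

# Print a rough text bar chart in chronological order.
for year in sorted(papers_per_year):
    print(year, "#" * papers_per_year[year])
```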

NLP papers are definitely proliferating over time! Our data only represents a small snapshot of all NLP papers, but even here we can see the number of papers per year trending upwards since the mid-1970s.

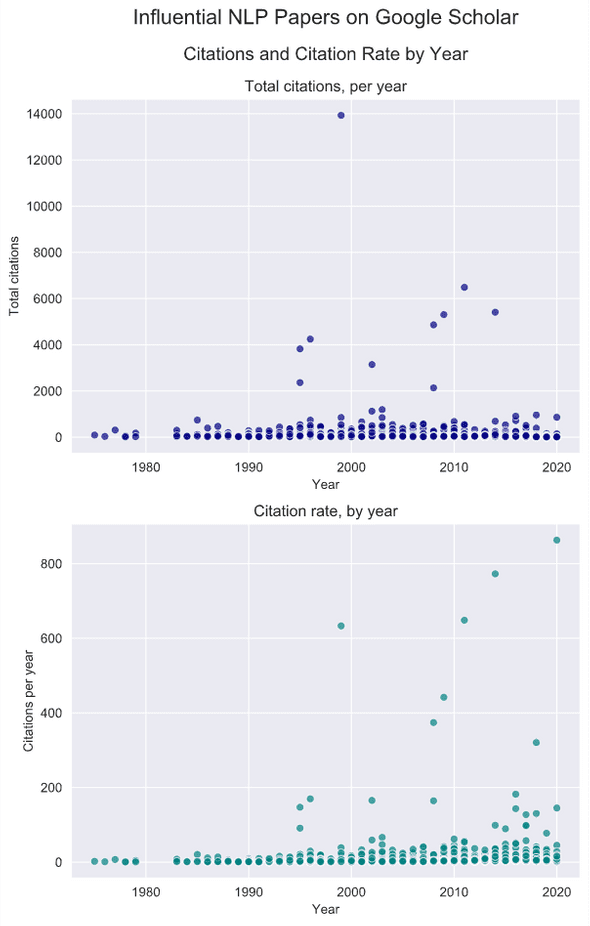

We need to be cautious here, though. The fact that more papers are being produced doesn’t necessarily tell us where the influential periods of production occur. It does tell us that NLP is growing in popularity, which is in itself an interesting trend. Perhaps we can get a better idea of the influence aspect by looking at citation counts and citation rates over time.

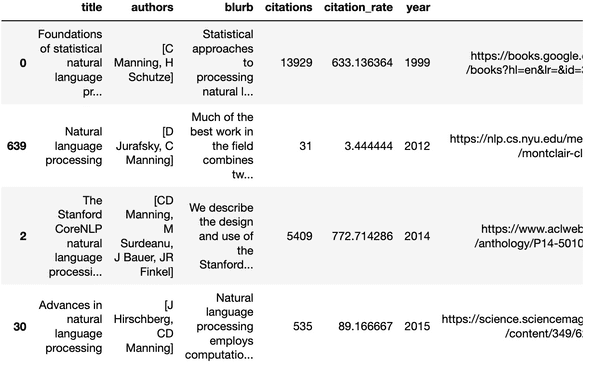

Number of citations per year and citation rates per year look reasonably steady, but there are some interesting outliers. What happened in 1999? It looks like that was a banner year for NLP. Perhaps we will find an answer as we continue to look at the data.
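One way to pin down an outlier like this is to total the citations for each publication year and look for the largest value. The following is a sketch under assumed data: the rows and numbers are hypothetical and chosen only to show the mechanics.

```python
from collections import defaultdict

# Hypothetical rows for illustration, not taken from the real dataset.
papers = [
    {"year": 1998, "citations": 300},
    {"year": 1999, "citations": 9000},
    {"year": 1999, "citations": 200},
    {"year": 2000, "citations": 450},
]

# Sum citations over all papers published in the same year.
citations_per_year = defaultdict(int)
for paper in papers:
    citations_per_year[paper["year"]] += paper["citations"]

# The year whose papers collected the most citations in total.
peak_year = max(citations_per_year, key=citations_per_year.get)
```

A single heavily cited paper can dominate a yearly total, so it is worth checking the individual rows behind any peak.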

Influential Papers

Now that we have an idea of the broader trend in production of NLP papers, let’s get to our key question. What are the most influential papers and books? What should you read if you want to learn about NLP?

An obvious place to start is to look at which papers have the most citations. Generally, if a paper is widely cited in academic literature, we can reasonably say that it has been an influential paper. Let’s take a look at the top 10 most cited papers.
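Ranking papers by a numeric column can be sketched in a few lines. The helper name top_n and the sample rows below are hypothetical and only illustrate the idea.

```python
from operator import itemgetter


def top_n(rows, key, n=10):
    """Return the n rows with the largest value for the given key."""
    return sorted(rows, key=itemgetter(key), reverse=True)[:n]


# Hypothetical rows for illustration, not taken from the real dataset.
papers = [
    {"title": "Paper A", "citations": 120},
    {"title": "Paper B", "citations": 4500},
    {"title": "Paper C", "citations": 980},
]

most_cited = top_n(papers, "citations", n=2)
```

Passing a different key, such as a citation_rate field, would rank the same rows by that metric instead.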

The clear leader in the citation count is Foundations of Statistical Natural Language Processing (FSNLP) by C Manning and H Schutze, which has 13,929 citations—more than double the next contender. FSNLP was published in 1999, which would appear to solve the mystery outlier from the high-level look we took at the data earlier. If we’re using citation count as our metric for influence, this data would imply that the following are the five most influential NLP papers:

- Foundations of Statistical Natural Language Processing, by C Manning and H Schutze, with 13,929 citations;

- Natural Language Processing (almost) from Scratch, by R Collobert, J Weston, L Bottou, and M Karlen, with 6,484 citations;

- The Stanford CoreNLP Natural Language Processing Toolkit, by CD Manning, M Surdeanu, J Bauer, and JR Finkel, with 5,409 citations;

- Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit, by S Bird, E Klein, and E Loper, with 5,304 citations; and

- A Unified Architecture for Natural Language Processing: Deep Neural Networks with Multitask Learning, by R Collobert and J Weston, with 4,862 citations.

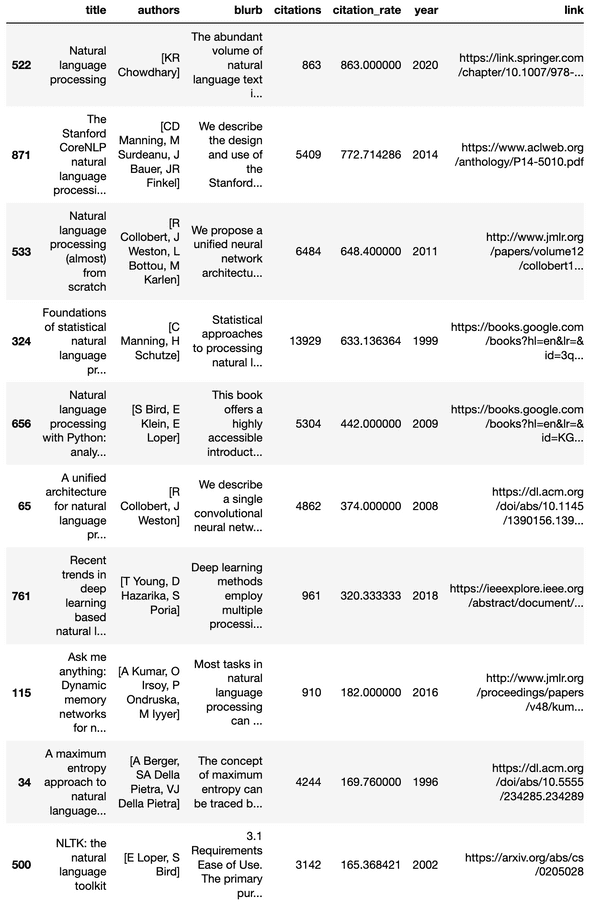

As we noted earlier though, older papers have an advantage over newer papers because they have had more time to generate citations. Let’s look at our other metric, the yearly citation rate, to see if we get different results.

Our top five papers by citation rate are almost the same as the top papers by total citations, with a slight reordering. But we have a new entrant to the mix and, moreover, a new winner! Natural language processing by KR Chowdhary has the highest citation rate, with 863 citations per year. What’s more, the paper was published in 2020, which means the year isn’t even up!

Looking at the blurb doesn’t tell us much about why this paper has been so popular, but if we follow the link to the full abstract we see that the paper is actually a chapter from KR Chowdhary’s book Fundamentals of Artificial Intelligence. Perhaps this tells us something about the trend of NLP in general as we move from linguistic analysis to artificial intelligence applications. KR Chowdhary is a professor of computer science at Jodhpur Institute of Engineering & Technology, and based on our data, it would seem that he is one of the most influential figures in NLP and AI today.

Speaking of influential individuals, that seems like a good next step in our exploration.

Influential Authors

One of the great things about NLP is that it benefits from the expertise of all sorts of people, from computer scientists to linguists to statisticians.

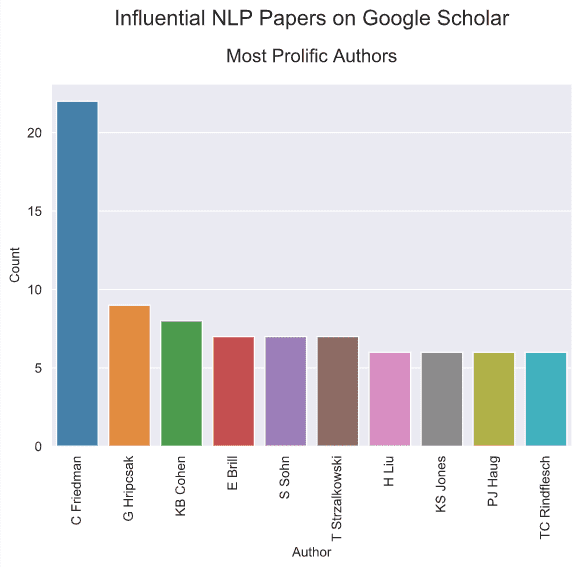

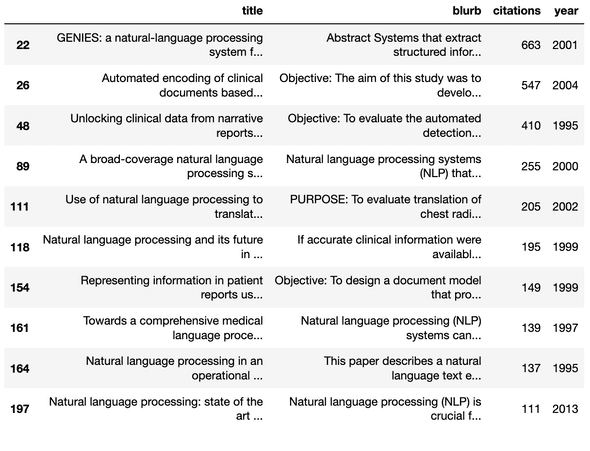

To understand who the most influential authors are, let’s start by looking at who is the most prolific. While we’re at it, let’s see how many unique authors were involved in writing our 973 influential papers.

It looks like 1,937 authors contributed to our 973 papers, which makes sense since most papers have multiple authors. The most prolific author, with 22 papers, is C Friedman. So is C Friedman the most influential author? Maybe the paper titles will give us a clue.
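Counts like these can be derived by splitting each paper’s author field and tallying the names. The sketch below assumes that the authors column is a comma-separated string such as “A Author, B Writer”. That format, and the sample values, are assumptions for illustration.

```python
from collections import Counter

# Hypothetical author fields, one per paper.
author_fields = [
    "A Author, B Writer",
    "A Author",
    "C Scholar, A Author, B Writer",
]

paper_counts = Counter()
for field in author_fields:
    # Use a set so an author is counted at most once per paper.
    names = {name.strip() for name in field.split(",") if name.strip()}
    paper_counts.update(names)

unique_authors = len(paper_counts)
most_prolific, n_papers = paper_counts.most_common(1)[0]
```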

It looks like most of C Friedman’s papers are related to medical issues and bioinformatics. Unfortunately, Google Scholar doesn’t tell us much about who the authors are, but a quick Internet search shows that C Friedman is Carol Friedman, a professor of biomedical informatics at Columbia University. And with so many papers to her name (several of which have hundreds of citations), she sure looks like an influential author! If you’re interested in biomedical informatics, then she sounds like a good author to start with.

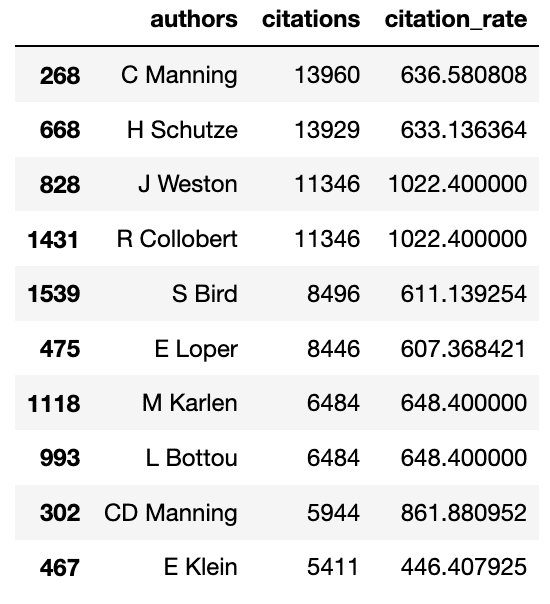

Of course, quantity of papers alone isn’t the sole indicator of influence. What happens if we look at a different measure? Rather than evaluate total papers, let’s see which author has the most citations.

The author with the most citations is C Manning with a staggering 13,960 citations in our data. (You may recognize the name from our earlier look at most cited papers.) As we look at the top 10 authors by total citations, an interesting conundrum appears. C Manning is the top author, but another top author is CD Manning. Are they the same person? Let’s look at the papers.
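Totalling citations per author can be sketched as follows. The rows are hypothetical, the authors field is assumed to be a comma-separated string, and giving every co-author full credit for a paper’s citations is an assumption rather than a documented detail of this analysis.

```python
from collections import defaultdict

# Hypothetical rows for illustration, not taken from the real dataset.
papers = [
    {"authors": "A Author, B Writer", "citations": 100},
    {"authors": "A Author", "citations": 30},
    {"authors": "AB Author, C Scholar", "citations": 500},
]

# Assumption: each co-author receives the paper's full citation count.
citations_by_author = defaultdict(int)
for paper in papers:
    for name in paper["authors"].split(","):
        citations_by_author[name.strip()] += paper["citations"]

# Authors ordered from most to fewest total citations.
ranked = sorted(citations_by_author.items(), key=lambda item: item[1], reverse=True)
```

Because names are matched as exact strings, “A Author” and “AB Author” are tallied separately here even if they refer to the same person.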

Once again, we’re going to have to do a bit of research to determine whether C Manning and CD Manning are the same person. Now that we have the titles of the papers, a quick web search should reveal the information we need. And indeed, we can see that all four papers are co-authored by Christopher D. Manning, a professor of machine learning, linguistics, and computer science at Stanford University and the Director of the Stanford Artificial Intelligence Laboratory. Prof. Manning is also the author of Introduction to Information Retrieval, which did not come up in our search for “natural language processing”, but is arguably his most influential text with 18,934 citations. Even though Prof. Manning has only four papers in our list (plus many more not on it), his citation count is far and away the highest. If we’re looking for the most influential NLP expert, it would seem that Manning is a good candidate.

Of course, this raises the question of whether our list has other instances of authors that are listed under multiple different names. The answer is almost certainly yes. However, given that we have nearly 2,000 different authors, for now we’ll set that question aside rather than try to research all of them.
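If we did want to narrow the search, a rough heuristic could flag name variants that share a surname and first initial. The sketch below assumes names follow the “initials surname” pattern seen in our data, and the helper name alias_key is hypothetical. It produces candidates for manual review, not confirmed matches, since different people can share a surname and an initial.

```python
from collections import defaultdict


def alias_key(name):
    """Surname plus first initial, e.g. 'CD Manning' -> ('manning', 'c')."""
    parts = name.split()
    initials, surname = parts[0], parts[-1]
    return (surname.lower(), initials[0].lower())


# Author names as they appear in this post.
names = ["C Manning", "CD Manning", "H Schutze", "C Friedman"]

# Group every name variant under its surname and first initial.
groups = defaultdict(set)
for name in names:
    groups[alias_key(name)].add(name)

# Keep only groups with more than one spelling: these need a manual check.
candidates = {
    key: sorted(variants) for key, variants in groups.items() if len(variants) > 1
}
```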

Learning from Experts

If the number of NLP papers being published each year is any indication, it would seem that NLP is a growing field. Here, we have only scratched the surface, both in terms of number of papers we analyzed and type of analysis. However, if you’re interested in learning NLP, perhaps this data will provide you with a useful starting point. So, get reading, and let us know what you think!