OK, you may not be Vince Clortho, Keymaster of Gozer, but you can still master the art of extracting keywords from texts! (Not nearly as exciting, I know, but still useful!) In this article we’ll talk about statistical, graph-based, algorithmic, and machine learning techniques to identify keywords and keyphrases in texts.

A keyword is a word that represents the most important ideas in a text. Keywords are useful for information retrieval systems, extractive summarization, classification tasks, and more. Keywords also provide a useful means of quickly comparing texts, with the idea that texts with very similar keywords are likely to be similar in their totality. Identifying these words is by no means easy, though, and different techniques can produce very different results. Further, what makes a “good keyword” is a subjective question, and the type of text you’re working on makes a difference. For that reason, it’s useful to understand the various techniques and experiment to find the ones best suited to your needs.

In this article we’ll use three categories of texts: news articles, scientific articles, and books. As you’ll see, the types of keywords we extract from each will vary in usefulness, particularly as texts get longer. For the sake of reproducibility, we’ll use datasets from Hugging Face for the news and scientific articles, and books from Project Gutenberg. To keep things interesting, we’ll save details about what these texts are actually about until the end. Let’s get started by downloading our texts.

```python
from datasets import load_dataset
import requests
import re


def read_text_from_url(url: str, encoding: str = "utf-8") -> str:
    """
    Reads text content from a URL with specified encoding.

    Args:
        url (str): The URL of the text file to read
        encoding (str, optional): The encoding to use when reading the text. Defaults to 'utf-8'.

    Returns:
        str: The text content from the URL

    Raises:
        requests.RequestException: If the request fails
    """
    try:
        response = requests.get(url)
        response.raise_for_status()  # Raise an exception for bad status codes
        response.encoding = encoding  # Set the encoding
        return response.text
    except requests.RequestException as e:
        print(f"Error fetching text from URL: {e}")
        raise


def read_gutenberg_url(url: str, encoding: str = "utf-8") -> str:
    """
    Reads text content from a Project Gutenberg URL with specified encoding.

    Args:
        url (str): The URL of the text file to read
        encoding (str, optional): The encoding to use when reading the text. Defaults to 'utf-8'.

    Returns:
        str: The text content from the URL
    """
    try:
        text = read_text_from_url(url, encoding)
        # Remove Project Gutenberg header and footer
        start = re.search(r"\*\*\* START OF THE PROJECT GUTENBERG EBOOK.*?\*\*\*", text, re.DOTALL)
        end = re.search(r"\*\*\* END OF THE PROJECT GUTENBERG EBOOK.*?\*\*\*", text, re.DOTALL)
        if start and end:
            text = text[start.end():end.start()].strip()
        return text
    except Exception as e:
        print(f"Error reading Project Gutenberg URL: {e}")
        raise


# load news and science datasets from Hugging Face
news = load_dataset("cnn_dailymail", "3.0.0", split="train[:2]")
news = news.rename_column("article", "text")
news = news.rename_column("highlights", "summary")

science = load_dataset("ccdv/arxiv-summarization", split="train[:2]")
science = science.rename_column('article', 'text')
science = science.rename_column('abstract', 'summary')

# download books from Project Gutenberg
books = [
    {"url": "https://www.gutenberg.org/ebooks/43.txt.utf-8"},
    {"url": "https://www.gutenberg.org/ebooks/5200.txt.utf-8"},
]

for book in books:
    book["text"] = read_gutenberg_url(book["url"])

# combine all texts into a single list
texts = []
for article in news:
    texts.append({"category": "news", "text": article["text"], "summary": article["summary"]})
for article in science:
    texts.append({"category": "science", "text": article["text"], "summary": article["summary"]})
for book in books:
    texts.append({"category": "book", "text": book["text"], "summary": "N/A"})

# print length of each text
for idx, text in enumerate(texts):
    print(f"Text {idx + 1} ({text['category']}): {len(text['text'])} characters")
```

```
Text 1 (news): 2527 characters
Text 2 (news): 4051 characters
Text 3 (science): 26346 characters
Text 4 (science): 17929 characters
Text 5 (book): 141352 characters
Text 6 (book): 120348 characters
```

Keywords by Frequency

The first, and perhaps most obvious, approach to identifying keywords is to simply find the most common words in each text. We can do this by building a bag of words for each text and representing our frequency data as a term-document matrix, where each row is a text and each column shows the frequency of a word in the combined vocabulary. (For more detail on this topic, check out the article [How Does a Computer Learn to Read](/blog/how-does-a-computer-learn-to-read TODO).) For the sake of consistency, we’re going to use spaCy for tokenization and for identifying stopwords and punctuation.

```python
import spacy
import numpy as np
from tqdm import tqdm

# load the spaCy model
nlp = spacy.load('en_core_web_sm', disable=['parser', 'ner', 'lemmatizer', 'tagger'])

# process each text with the spaCy model
for text in tqdm(texts):
    text["doc"] = nlp(text["text"])

print("Text lengths in words:")
for idx, text in enumerate(texts):
    cat = text["category"]
    print(f"Text {idx + 1} ({cat}) length: {len(text['doc'])}")
```

```
Text lengths in words:
Text 1 (news) length: 555
Text 2 (news) length: 836
Text 3 (science) length: 4675
Text 4 (science) length: 3477
Text 5 (book) length: 33026
Text 6 (book) length: 26788
```

Now that we have our spaCy documents, the next step is to extract tokens, or perhaps more accurately “candidates” for possible keywords or keyphrases. To do this, we can use the attributes spaCy adds to each token to filter out punctuation. (We could, and probably should, also filter out stopwords, but for reasons that will become clear, we’ll leave them in for now.)

One thing we should consider right away is the difference between a keyword and a keyphrase. A keyword, as you might expect, is just a single word, whereas a keyphrase can span multiple words. In many cases, keyphrases are actually going to be more informative about a text. For example, if we have a scientific document that repeatedly uses the phrase “quantum entanglement”, knowing that it is a keyphrase is likely more useful than seeing the keywords “quantum” and “entanglement” on their own, particularly if our extraction method only returns one of those words.

To get keyphrases, we’ll include ngram functionality in our tokenization method. An ngram is a sequence of n adjacent words in a text, so in the sentence “Quantum entanglement is a phenomenon where two particles become linked, regardless of distance” the ngrams would include “quantum entanglement”, “entanglement is”, “is a”, and so on. These examples are bigrams (ngrams with 2 words), but an ngram could be any number of words. Typically, it’s useful to keep the minimum and maximum values of n relatively low, so we’ll use the range (2, 4) in our examples.
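The sliding-window idea is easy to see in isolation. Below is a minimal sketch in plain Python, applied to the example sentence above; the helper name `make_ngrams` is ours, and it uses a crude regex tokenizer instead of spaCy, purely for illustration.

```python
import re


def make_ngrams(tokens: list[str], min_n: int = 2, max_n: int = 4) -> list[str]:
    """Return every run of n adjacent tokens, for each n in [min_n, max_n]."""
    grams = []
    for n in range(min_n, max_n + 1):
        # slide a window of n tokens across the sequence, one step at a time
        for i in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[i:i + n]))
    return grams


sentence = "Quantum entanglement is a phenomenon where two particles become linked, regardless of distance"
# crude tokenization for illustration: lowercase alphabetic runs only
tokens = re.findall(r"[a-z]+", sentence.lower())

bigrams = make_ngrams(tokens, min_n=2, max_n=2)
all_grams = make_ngrams(tokens, min_n=2, max_n=4)
print(bigrams[:3])     # ['quantum entanglement', 'entanglement is', 'is a']
print(len(all_grams))  # 33
```

With n ranging over (2, 4), a text of L tokens yields (L − 1) + (L − 2) + (L − 3) = 3L − 6 ngrams, so the ngram vocabulary grows much faster than the word vocabulary.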

```python
def get_tokens(
    doc: spacy.tokens.Doc,
    with_stopwords: bool = False,
    with_punct: bool = False,
    min_n: int = 1,
    max_n: int = 1
) -> list[str]:
    """
    Get candidate tokens and n-grams from a spaCy Doc based on filtering criteria.

    Args:
        doc (spacy.tokens.Doc): Input spaCy Doc
        with_stopwords (bool, optional): Include stopwords. Defaults to False.
        with_punct (bool, optional): Include punctuation. Defaults to False.
        min_n (int, optional): Minimum n-gram length. Defaults to 1.
        max_n (int, optional): Maximum n-gram length. Defaults to 1.

    Returns:
        list[str]: List of candidate tokens and n-grams
    """
    tokens = []
    for token in doc:
        if token.is_space:
            continue
        if not with_stopwords and token.is_stop:
            continue
        if not with_punct and token.is_punct:
            continue
        tokens.append(token.text.lower())

    # generate n-grams
    # if range is (1, 1), this will return unigrams (single words)
    # note: n-grams are built from the *filtered* tokens, so they can span
    # removed punctuation (e.g. across a sentence boundary)
    candidates = []
    for n in range(min_n, max_n + 1):
        for i in range(len(tokens) - n + 1):
            ngram = " ".join(tokens[i:i + n])
            candidates.append(ngram)

    return candidates


def build_vocab(token_lists: list[list[str]]) -> list[str]:
    """
    Build vocabulary from lists of tokens.

    Args:
        token_lists (list[list[str]]): Lists of tokens to build vocab from

    Returns:
        list[str]: Sorted list of unique tokens
    """
    vocab = set()
    for tokens in token_lists:
        vocab.update(tokens)

    return sorted(vocab)
```

```python
# tokenize each text and build a joint vocabulary
for text in texts:
    text["tokens"] = get_tokens(
        text["doc"], with_stopwords=True, with_punct=False, min_n=1, max_n=1
    )

# build joint vocabulary and helper maps between words and indices
vocab = build_vocab([text["tokens"] for text in texts])
stoi = {word: idx for idx, word in enumerate(vocab)}
itos = {idx: word for idx, word in enumerate(vocab)}

print("Vocabulary sizes in unique words:")
print(f"Corpus: {len(vocab)}")
for idx, text in enumerate(texts):
    cat = text["category"]
    vocab_size = len(set(text["tokens"]))
    print(f"Text {idx + 1} ({cat}): {vocab_size}")
```

```
Vocabulary sizes in unique words:
Corpus: 6374
Text 1 (news): 270
Text 2 (news): 330
Text 3 (science): 978
Text 4 (science): 888
Text 5 (book): 3939
Text 6 (book): 2577
```

Note that as we tokenized our texts, we also built a joint vocabulary across all six texts. We’ll come back to this shortly, but the reason is that identifying keywords in one document sometimes requires knowing how a word is or is not used in other documents. Now that we have our tokenized texts and our vocab, we can get the word frequencies from each text and pull out the keywords as the most common words in the texts.

```python
def get_token_frequencies(
    tokens: list[str],
    vocab: list[str],
    vocab_stoi_map: dict[str, int]
) -> np.ndarray:
    """
    Computes the frequency of each vocabulary word in a list of tokens.

    Args:
        tokens (list[str]): The list of tokens
        vocab (list[str]): The vocabulary list
        vocab_stoi_map (dict[str, int]): A dictionary mapping words to indices in the vocabulary

    Returns:
        np.ndarray: The frequency of each word in the vocabulary
    """
    frequencies = np.zeros(len(vocab), dtype=int)
    for token in tokens:
        if token.lower() in vocab_stoi_map:
            idx = vocab_stoi_map[token.lower()]
            frequencies[idx] += 1
    return frequencies


# build the term-document matrix for the texts
tdm = np.array([get_token_frequencies(text["tokens"], vocab, stoi) for text in texts])
tdm
```

```
array([[  1,   3,   1, ...,   0,   0,   0],
       [  0,   0,   0, ...,   0,   0,   0],
       [ 19,   0,   0, ...,   0,   0,   0],
       [  0,   0,   0, ...,   0,   0,   0],
       [  0,   0,   0, ...,   2, 140,   3],
       [  0,   0,   0, ...,  11, 194,   9]], shape=(6, 6374))
```

Before pulling out the most common keywords, let’s look at the term-document matrix (TDM) we created. The TDM provides counts of every word in the vocabulary for all the texts. This provides a handy way of comparing word usage across texts. Let’s check how a few words differ in frequency.

```python
words = ["apple", "forest", "london", "president"]
word_indices = [stoi[word] for word in words]

print("Word frequencies by document category:")
print("-" * 50)

cat_width = max(len(text["category"]) for text in texts)
word_width = max(len(word) for word in words)

header = f"{'Category':<{cat_width}}"
for word in words:
    header += f"  {word:>{word_width}}"
print(header)
print("-" * (cat_width + (word_width + 2) * len(words)))

for idx, text in enumerate(texts):
    cat = text["category"]
    frequencies = tdm[idx, word_indices]
    row = f"{cat:<{cat_width}}"
    for freq in frequencies:
        row += f"  {freq:>{word_width}}"
    print(row)
```

```
Word frequencies by document category:
--------------------------------------------------
Category      apple     forest     london  president
---------------------------------------------------
news             0          0          1          0
news             0          0          0          1
science          0          0          0          0
science          0          0          0          0
book             0          1         12          0
book             5          0          0          0
```

Checking individual words is useful if you know what you’re looking for, but our real goal in this article is to identify keywords that we don’t know ahead of time. Using the TDM, we can now pull out the most frequent words for each text.

```python
def get_top_keywords(
    tdm: np.ndarray,
    vocab: list[str],
    n_keywords: int = 5
) -> list[list[str]]:
    """
    Extracts the top n keywords from a term-document matrix.

    Args:
        tdm (np.ndarray): A term-document matrix
        vocab (list[str]): The vocabulary list
        n_keywords (int, optional): The number of keywords to extract. Defaults to 5.

    Returns:
        list[list[str]]: The top n keywords for each document
    """
    keywords = []
    for row in tdm:
        indices = np.argsort(row)[::-1][:n_keywords]
        keywords.append([vocab[idx] for idx in indices])
    return keywords


def print_keywords_lists(
    keyword_lists: list[list[str]],
    texts: list[dict],
    bulleted: bool = False
):
    """
    Prints a list of keywords for each document.

    Args:
        keyword_lists (list[list[str]]): A list of keyword lists
        texts (list[dict]): The list of text dictionaries
        bulleted (bool, optional): Print the keywords as a bulleted list. Defaults to False.
    """
    for idx, keywords in enumerate(keyword_lists):
        cat = texts[idx]["category"]
        if bulleted:
            print(f"Text {idx + 1} ({cat}):")
            for keyword in keywords:
                print(f"  - {keyword}")
            print("")
        else:
            print(f"Text {idx + 1} ({cat}): {', '.join(keywords)}")
```

```python
tdm_keywords = get_top_keywords(tdm, vocab, n_keywords=5)

print("Top keywords by frequency:")
print_keywords_lists(tdm_keywords, texts)
```

```
Top keywords by frequency:
Text 1 (news): the, he, to, of, a
Text 2 (news): the, in, to, and, they
Text 3 (science): the, of, is, and, a
Text 4 (science): the, of, and, in, is
Text 5 (book): the, and, of, to, i
Text 6 (book): the, to, and, he, his
```

Well. That’s disappointing. Every single one of our keywords by frequency is a stopword like “and”, “the”, or “to”. This is, of course, exactly what we expected (and the reason we could, or should, have filtered stopwords out before building our vocabulary), but it illustrates an important weakness of frequency-based keyword methods. Perhaps the keyphrases will tell us something more.

```python
# calculate (2, 4)-grams for each text
for text in texts:
    text["ngrams"] = get_tokens(
        text["doc"], with_stopwords=True, with_punct=False, min_n=2, max_n=4
    )

# build joint ngram vocabulary and helper maps between ngrams and indices
ngram_vocab = build_vocab([text["ngrams"] for text in texts])
ngram_stoi = {ngram: idx for idx, ngram in enumerate(ngram_vocab)}
ngram_itos = {idx: ngram for idx, ngram in enumerate(ngram_vocab)}

print(f"Corpus ngram vocabulary size (2, 4): {len(ngram_vocab)}\n")

# build the term-document matrix for the ngrams
ngram_tdm = np.array(
    [get_token_frequencies(text["ngrams"], ngram_vocab, ngram_stoi) for text in texts]
)
ngram_keywords = get_top_keywords(ngram_tdm, ngram_vocab, n_keywords=5)

print("Top keyphrases by frequency:")
print_keywords_lists(ngram_keywords, texts, True)
```

```
Corpus ngram vocabulary size (2, 4): 137230

Top keyphrases by frequency:
Text 1 (news):
  - harry potter
  - he told
  - in a
  - of the
  - he has

Text 2 (news):
  - mentally ill
  - it 's
  - leifman says
  - on the
  - forgotten floor

Text 3 (science):
  - of the
  - in the
  - learning rates
  - @xmath159 quantile
  - for the

Text 4 (science):
  - of the
  - in the
  - is the
  - and the
  - et al

Text 5 (book):
  - of the
  - in the
  - it was
  - and the
  - mr. utterson

Text 6 (book):
  - gregor 's
  - of the
  - he had
  - it was
  - in the
```

The keyphrases do seem to be a bit more informative. The first article has a pretty big give-away with the term “Harry Potter”, suggesting it’s about entertainment. The second article seems to be related to mental health, with a phrase like “mentally ill” being so common. The term “learning rates” is certainly interesting in the third text; maybe it’s about machine learning or math? The fourth text has zero useful clues, but the two books appear to reveal the names of characters. But who are Mr. Utterson and Gregor? Surely there has to be a better way!
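Before moving on, it’s worth seeing the simple fix we keep alluding to: dropping stopwords before counting. Here is a minimal sketch in plain Python, assuming a tiny hand-picked stoplist and a made-up sample sentence (both hypothetical, for illustration only; the helper name `frequency_keywords` is ours).

```python
import re
from collections import Counter

# a tiny, hand-picked stoplist for illustration only; spaCy's own list
# (spacy.lang.en.stop_words.STOP_WORDS) has a few hundred entries
EXAMPLE_STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "he", "his", "was"}


def frequency_keywords(text: str, stopwords: set[str], n: int = 3) -> list[tuple[str, int]]:
    """Rank words by raw frequency after dropping stopwords."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in stopwords)
    # most_common keeps first-seen order among ties
    return counts.most_common(n)


sample = (
    "The lawyer walked to the door of the house. "
    "The lawyer knocked, and the door of the house opened."
)
print(frequency_keywords(sample, stopwords=set()))              # [('the', 6), ('lawyer', 2), ('door', 2)]
print(frequency_keywords(sample, stopwords=EXAMPLE_STOPWORDS))  # [('lawyer', 2), ('door', 2), ('house', 2)]
```

Filtering helps, but only so far: a stoplist can’t tell us that “lawyer” is more distinctive of this text than “door”, because that requires knowing how words are used in other texts.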

TF-IDF Keywords

The most common statistical method to select keywords from documents is known as term frequency-inverse document frequency (TF-IDF). The advantage of the TF-IDF model over the bag-of-words approach is that TF-IDF uses a weighting factor to give greater weight to words that appear frequently in one document, but rarely in other documents. As a result, TF-IDF assigns very low weights to stopwords like “the” or “and” because they tend to appear very frequently in all documents, whereas unique words such as scientific terms or character names may only appear in a few documents and thus have greater weight.

As with our TDM, the rows in a TF-IDF matrix are documents and the columns are words. However, in the TDM the values were word frequencies, whereas in the TF-IDF matrix the values will be the term frequency (TF) multiplied by the inverse document frequency (IDF). We can calculate $\mathrm{tf}(t, d)$ with the formula below, where $t$ is the term, $d$ is the document, $\sum_{t' \in d} f_{t',d}$ is the total number of terms in the document, and $f_{t,d}$ is the raw count of term $t$ in $d$.

$$\mathrm{tf}(t, d) = \frac{f_{t,d}}{\sum_{t' \in d} f_{t',d}}$$

We then calculate $\mathrm{idf}(t, D)$ (the logarithm of the inverse fraction of documents that contain a word), where $D$ is the corpus (set of documents) and $N = |D|$ is the total number of documents.

$$\mathrm{idf}(t, D) = \log \frac{N}{|\{d \in D : t \in d\}|}$$

Together, this gives us the final formula for TF-IDF:

$$\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \cdot \mathrm{idf}(t, D)$$
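To see the three formulas working together, here is a direct transcription into plain Python on a tiny, made-up corpus (hypothetical data). It uses the natural logarithm; changing the base only rescales every score by a constant. It recounts documents on every call, so it’s for illustration rather than speed.

```python
import math


def tf(term: str, doc: list[str]) -> float:
    """Raw count of the term divided by the total number of terms in the document."""
    return doc.count(term) / len(doc)


def idf(term: str, corpus: list[list[str]]) -> float:
    """Log of the number of documents over the number of documents containing the term."""
    n_containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / n_containing)


def tfidf(term: str, doc: list[str], corpus: list[list[str]]) -> float:
    return tf(term, doc) * idf(term, corpus)


# a made-up three-document corpus, already tokenized
corpus = [
    "the lawyer read the will".split(),
    "the doctor wrote the will".split(),
    "the lawyer met the doctor".split(),
]
doc = corpus[0]
for term in ["the", "lawyer", "read"]:
    print(f"{term:<7} tf={tf(term, doc):.2f}  idf={idf(term, corpus):.3f}  tfidf={tfidf(term, doc, corpus):.3f}")
```

“the” has the highest term frequency in the first document but scores exactly 0, because it appears in every document; “read”, which appears only in the first document, outranks “lawyer”, which appears in two.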

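Don’t worry too much about the math; the main point is that words that are common across the whole corpus are down-weighted, and words that only appear in one or a few documents are up-weighted. We can calculate this in code using our existing TDMs, as shown below. Note that the code takes two small liberties with the formulas. It uses raw counts as TF rather than dividing by document length, which rescales each document’s row by a constant and so doesn’t change which words rank highest within a text. It also adds 1 to the document count in the IDF denominator, so with our six texts a word that appears in every text gets a negative score and one that appears in five gets exactly zero.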

```python
def calculate_tfidf(tdm: np.ndarray) -> np.ndarray:
    """
    Calculate TF-IDF matrix from a term-document matrix.

    Args:
        tdm (np.ndarray): Term-document matrix

    Returns:
        np.ndarray: TF-IDF values
    """
    n_documents, n_terms = tdm.shape
    tfidf = np.zeros_like(tdm, dtype=float)

    for term_idx in range(n_terms):
        docs_with_term = sum(1 for doc_freq in tdm[:, term_idx] if doc_freq > 0)
        idf = np.log(n_documents / (docs_with_term + 1))
        tfidf[:, term_idx] = tdm[:, term_idx] * idf

    return tfidf


tfidf = calculate_tfidf(tdm)
tfidf
```

```
array([[  0.69314718,   3.29583687,   1.09861229, ...,   0.        ,
          0.        ,   0.        ],
       [  0.        ,   0.        ,   0.        , ...,   0.        ,
          0.        ,   0.        ],
       [ 13.16979643,   0.        ,   0.        , ...,   0.        ,
          0.        ,   0.        ],
       [  0.        ,   0.        ,   0.        , ...,   0.        ,
          0.        ,   0.        ],
       [  0.        ,   0.        ,   0.        , ...,   1.38629436,
         97.04060528,   2.07944154],
       [  0.        ,   0.        ,   0.        , ...,   7.62461899,
        134.47055303,   6.23832463]], shape=(6, 6374))
```

Note that our TF-IDF matrix has the same shape as the TDM: one row per document in our corpus and one column per word in the shared vocabulary. However, unlike the TDM, the values are decimal numbers, with each representing the TF-IDF score for that term/document combination. Let’s see what keywords we get using the TF-IDF matrix.

```python
tfidf_keywords = get_top_keywords(tfidf, vocab, n_keywords=5)

print("Top keywords by TF-IDF:")
print_keywords_lists(tfidf_keywords, texts)
```

```
Top keywords by TF-IDF:
Text 1 (news): potter, radcliffe, reuters, 'll, harry
Text 2 (news): leifman, mentally, 's, miami, jail
Text 3 (science): additive, kernel, quantile, @xcite, assumption
Text 4 (science): tag, @xcite, mass, st, mev
Text 5 (book): utterson, my, i, hyde, jekyll
Text 6 (book): gregor, she, 's, sister, he
```

Much more informative! Due to the down-weighting of stopwords and other very common terms, our TF-IDF keywords are significantly more interesting. We now see what appear to be proper nouns in texts 1, 5, and 6, and some unusual words like “jail” in text 2 or “kernel” and “quantile” in text 3. We even get some big give-aways like the terms “hyde” and “jekyll” in one of our books. Let’s see what we get using the same approach with ngrams.

```python
ngram_tfidf = calculate_tfidf(ngram_tdm)

ngram_tfidf_keywords = get_top_keywords(ngram_tfidf, ngram_vocab, n_keywords=5)

print("Top keyphrases by TF-IDF:")
print_keywords_lists(ngram_tfidf_keywords, texts, True)
```

```
Top keyphrases by TF-IDF:
Text 1 (news):
  - harry potter
  - this year
  - this year he
  - potter and the order
  - potter and the

Text 2 (news):
  - leifman says
  - mentally ill
  - it 's
  - the forgotten floor
  - the forgotten

Text 3 (science):
  - learning rates
  - @xmath159 quantile
  - quantile regression
  - loss function
  - given by

Text 4 (science):
  - et al
  - the tag
  - the leptonic
  - the st
  - leptonic decay

Text 5 (book):
  - mr. utterson
  - the lawyer
  - of my
  - i was
  - it was

Text 6 (book):
  - gregor 's
  - his sister
  - his father
  - he had
  - he was
```

Looking at the keywords and keyphrases from TF-IDF, it’s increasingly clear what these documents are about. Text 1 seems to be about Harry Potter and the Order of the Phoenix, text 2 is still a bit unclear but seems to be about mental health, text 3 looks like it’s about machine learning with terms like “loss function” and “learning rates”, text 4 seems to be about physics or astronomy, and for the books we have some solid hints with character names.
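As an aside, the column-by-column loop in `calculate_tfidf` can be replaced with NumPy broadcasting, which helps once the vocabulary runs to over a hundred thousand ngrams. Here is a sketch that keeps the same raw-count TF and the same +1 in the IDF denominator; the function name is ours, not part of any library, and the toy matrix is made up.

```python
import numpy as np


def calculate_tfidf_vectorized(tdm: np.ndarray) -> np.ndarray:
    """
    Vectorized equivalent of the loop-based calculate_tfidf:
    raw counts multiplied by log(n_documents / (docs_with_term + 1)).
    """
    n_documents = tdm.shape[0]
    docs_with_term = (tdm > 0).sum(axis=0)            # documents containing each term
    idf = np.log(n_documents / (docs_with_term + 1))  # same +1 as the loop version
    return tdm * idf                                   # broadcasts idf across every row


# toy matrix: 3 documents, 3 terms; term 0 appears in every document
toy_tdm = np.array([
    [2, 1, 0],
    [1, 0, 0],
    [3, 0, 4],
])
print(calculate_tfidf_vectorized(toy_tdm).round(3))
```

On the article’s matrices, `np.allclose(calculate_tfidf_vectorized(tdm), calculate_tfidf(tdm))` should hold, since both apply the same formula. The toy output also shows the quirk of the +1: the term found in all three documents ends up with negative weights.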

Now that we’ve seen a few statistical approaches at work, let’s take a look at something slightly different: a graph-based approach.

Co-occurrence Graph

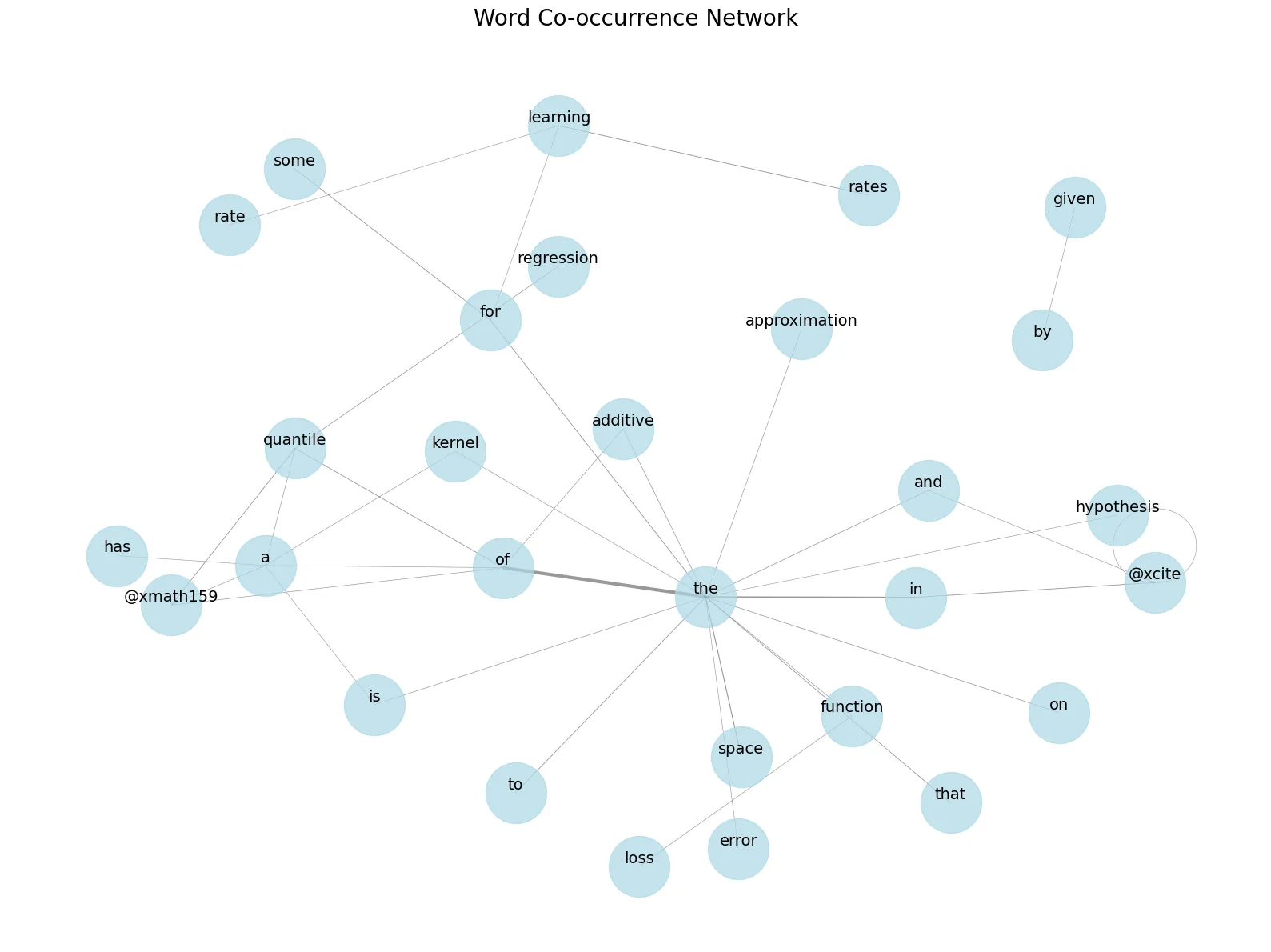

We won’t spend too much time on this one, but it’s worth briefly exploring graph-based approaches to keyword extraction, if only to see that this is a hard problem with many different solutions. Imagine that each unique word in a document is a node on a graph, and the edges of the graph count the number of times that two words “co-occur” (that is, appear within a few words of one another). If we build a graph like this, we can then use a graph algorithm like PageRank (the algorithm Google originally developed to rank web pages in search results) to see which nodes in the graph are most important to the overall network. The top nodes would be our keywords. Let’s see it in action.

```python
import networkx as nx
from collections import Counter


def build_cooccurrence_graph(tokens: list[str], window_size: int = 3) -> nx.Graph:
    """
    Build a graph of word co-occurrences from a list of tokens.

    Args:
        tokens (list[str]): The list of tokens
        window_size (int): The size of the co-occurrence window. Defaults to 3.

    Returns:
        nx.Graph: A graph where nodes are words and edges represent co-occurrences
    """
    co_occurrences = Counter()
    for i in range(len(tokens)):
        # pair each token with the next (window_size - 1) tokens
        for j in range(i + 1, min(i + window_size, len(tokens))):
            pair = tuple(sorted([tokens[i], tokens[j]]))
            co_occurrences[pair] += 1

    G = nx.Graph()
    for (w1, w2), weight in co_occurrences.items():
        G.add_edge(w1, w2, weight=weight)

    return G


def get_keywords_from_graph(G: nx.Graph, n: int = 5) -> list[str]:
    """
    Extract keywords from a word co-occurrence graph using PageRank.

    Args:
        G (nx.Graph): The word co-occurrence graph
        n (int): The number of top keywords to return. Defaults to 5.

    Returns:
        list[str]: A list of the top keywords
    """
    pagerank_scores = nx.pagerank(G, weight='weight')
    keywords = sorted(pagerank_scores, key=pagerank_scores.get, reverse=True)[:n]

    return keywords
```

```python
graph_keywords = []
for text in texts:
    g = build_cooccurrence_graph(text["tokens"], window_size=3)
    keywords = get_keywords_from_graph(g, n=5)
    graph_keywords.append(keywords)

print("Top keywords by graph-based keyword extraction:")
print_keywords_lists(graph_keywords, texts)
print("")

ngram_graph_keywords = []
for text in texts:
    g = build_cooccurrence_graph(text["ngrams"], window_size=3)
    keywords = get_keywords_from_graph(g, n=5)
    ngram_graph_keywords.append(keywords)

print("Top keyphrases by graph-based keyword extraction:")
print_keywords_lists(ngram_graph_keywords, texts)
```

```
Top keywords by graph-based keyword extraction:
Text 1 (news): the, he, his, a, to
Text 2 (news): the, in, to, and, they
Text 3 (science): the, of, is, and, a
Text 4 (science): the, of, and, is, in
Text 5 (book): the, and, of, a, to
Text 6 (book): the, to, and, he, his

Top keyphrases by graph-based keyword extraction:
Text 1 (news): harry potter, in a, he told, of the, actor says he
Text 2 (news): leifman says, it 's, mentally ill, on the, the forgotten floor
Text 3 (science): of the, in the, learning rates, for the, for some
Text 4 (science): of the, is the, in the, and the, the tag
Text 5 (book): of the, in the, it was, and the, of a
Text 6 (book): gregor 's, of the, he had, it was, in the
```

As with our TDM, the keywords we get are all stopwords, though the keyphrases give us a bit more information. This is not surprising, given that our rankings are driven by edge weight: as the most frequent words, stopwords are likely to have the most co-occurrences and thus the heaviest edges. (In fairness, as with the TDM, we really should remove stopwords in a real example.) However, co-occurrence graphs have a distinct advantage over TDMs: we can plot them!

```python
import matplotlib.pyplot as plt


def visualize_word_network(
    G: nx.Graph,
    figsize: tuple[int, int] = (20, 16),
    min_edge_weight: int = 2,
    seed: int = 42
):
    """
    Visualize a word co-occurrence network.

    Args:
        G (networkx.Graph): Graph of word co-occurrences
        figsize (tuple): Figure size (width, height)
        min_edge_weight (int): Minimum edge weight to display
        seed (int): Random seed for layout initialization
    """
    # filter edges and nodes based on weight
    edges_to_remove = [(u, v) for u, v, w in G.edges(data='weight') if w < min_edge_weight]
    G_filtered = G.copy()
    G_filtered.remove_edges_from(edges_to_remove)
    G_filtered.remove_nodes_from(list(nx.isolates(G_filtered)))

    if len(G_filtered) == 0:
        print("No nodes to display. Adjust the minimum edge weight.")
        return

    # visualize the network
    pos = nx.spring_layout(G_filtered, k=2, iterations=50, seed=seed)
    plt.figure(figsize=figsize)
    nx.draw_networkx_nodes(G_filtered, pos, node_color='lightblue', node_size=3000, alpha=0.7)

    edge_weights = [G_filtered[u][v]['weight'] for u, v in G_filtered.edges()]
    max_weight = max(edge_weights)
    scaled_weights = [3 * w / max_weight for w in edge_weights]

    nx.draw_networkx_edges(G_filtered, pos, width=scaled_weights, alpha=0.4)

    pos_attrs = {}
    for node, coords in pos.items():
        pos_attrs[node] = (coords[0], coords[1] + 0.02)

    nx.draw_networkx_labels(G_filtered, pos_attrs, font_size=14)

    plt.title("Word Co-occurrence Network", fontsize=20, pad=20)
    plt.axis('off')
    plt.tight_layout()
    plt.show()


visualize_word_network(
    build_cooccurrence_graph(texts[2]["tokens"], window_size=3),
    figsize=(16, 12),
    min_edge_weight=12
)
```

In the above example, we are plotting all of the nodes from text 3 that have edges with a weight of at least 12. These are the same words that would have appeared if we had set the number of keywords much higher, so we’re cheating a bit here to learn more about text 3 than we did before, but the visualization is still useful to see which words are most common, and how they relate to one another.
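For intuition about what `nx.pagerank` computes on these graphs, here is a bare-bones power-iteration sketch of weighted PageRank for an undirected graph, using only NumPy. The function name and toy edge list are hypothetical. It uses the same damping factor of 0.85 that `nx.pagerank` defaults to, but unlike the library it runs a fixed number of iterations instead of checking for convergence, and it assumes every node has at least one edge.

```python
import numpy as np


def weighted_pagerank(
    edges: dict[tuple[str, str], float], damping: float = 0.85, iterations: int = 100
) -> dict[str, float]:
    """Weighted PageRank on an undirected graph, computed by power iteration."""
    nodes = sorted({word for pair in edges for word in pair})
    index = {word: i for i, word in enumerate(nodes)}
    n = len(nodes)

    # symmetric weight matrix: an undirected edge can be walked both ways
    W = np.zeros((n, n))
    for (a, b), weight in edges.items():
        W[index[a], index[b]] += weight
        if a != b:
            W[index[b], index[a]] += weight

    # column-normalize: each node hands out its score in proportion to edge weight
    M = W / W.sum(axis=0, keepdims=True)

    rank = np.full(n, 1.0 / n)
    for _ in range(iterations):
        # teleport with probability (1 - damping), otherwise follow an edge
        rank = (1 - damping) / n + damping * (M @ rank)
    return dict(zip(nodes, rank))


# a made-up co-occurrence graph: edge weight = number of co-occurrences
toy_edges = {
    ("entropy", "information"): 3,
    ("entropy", "text"): 2,
    ("entropy", "keyword"): 2,
    ("keyword", "text"): 1,
}
scores = weighted_pagerank(toy_edges)
for word, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:<12} {score:.3f}")
```

“entropy”, which carries the most edge weight, comes out on top. On an undirected graph, PageRank scores closely track each node’s total edge weight, which is why the most frequent words, stopwords included, dominated the graph-based rankings.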

Although we’ve made some interesting discoveries thus far, all of our approaches have some significant downsides. The TDM and graph-based approaches are interesting, but without filtering stopwords they don’t actually reveal much. The TF-IDF approach is more revealing, but it relies on processing and comparing a full corpus of documents, rather than acting on a single document on its own. To improve our keyword extraction, we’ll now explore a few approaches that will hopefully produce more interesting results without requiring filtering or multiple documents.

Entropy to the Rescue!

In a [previous article](/blog/understanding-entropy TODO) we investigated how entropy can help us understand the information content of a particular text. It stands to reason that a word’s information content, and its contribution to the entropy of a text, might help us identify useful keywords. Even better, this is something we can calculate for words in a single document, using a pre-calculated estimate of the probability distribution of words in a particular language, meaning we don’t have to rely on comparing documents across a full corpus. Fortunately, spaCy provides lookup tables (via the separate spacy-lookups-data package) that we can use to get the probability of a given token, independent of surrounding tokens, and from that calculate information content and entropy contributions. Let’s take a look at what keywords this gives us on our texts.

```python
import pandas as pd
from spacy.lookups import load_lookups

# load the spaCy model once and attach the unigram probability table
# ("lexeme_prob" is provided by the spacy-lookups-data package)
nlp_probs = spacy.load('en_core_web_sm', disable=['parser', 'ner', 'lemmatizer', 'tagger'])
lookups = load_lookups("en", ["lexeme_prob"])
nlp_probs.vocab.lookups.add_table("lexeme_prob", lookups.get_table("lexeme_prob"))


def get_spacy_probability(token: str, nlp: spacy.Language) -> float:
    """
    Get the probability of a word in the spaCy language model.

    Args:
        token (str): The word to get the probability for
        nlp (spacy.Language): The spaCy language model

    Returns:
        float: The probability of the word
    """
    # Lexeme.prob is a log probability (unseen words fall back to a low default),
    # so exponentiate to get back a probability
    ln_prob = nlp.vocab[token].prob
    prob = np.exp(ln_prob)

    return prob


def word_entropy_df(tokens: list[str], nlp: spacy.Language = nlp_probs) -> pd.DataFrame:
    """
    Compute probability, information content and entropy contribution for each token.

    Args:
        tokens (list[str]): Words or space-separated ngrams
        nlp (spacy.Language): A spaCy model with the "lexeme_prob" table attached

    Returns:
        pd.DataFrame: One row of measures per token
    """
    # calculate entropy measures
    measures = []
    for token in tokens:
        joint_probability = 1
        subtokens = token.split(" ")

        # if token is an ngram, split and use joint probability
        # assume independence between subtokens, even though
        # this is not accurate in the real world
        for subtoken in subtokens:
            prob = get_spacy_probability(subtoken, nlp)
            joint_probability *= prob

        information_content = -np.log2(joint_probability)
        entropy_contribution = joint_probability * information_content
        measures.append({
            "token": token,
            "joint_probability": joint_probability,
            "information_content": information_content,
            "entropy_contribution": entropy_contribution
        })

    return pd.DataFrame(measures)
```

```python
entropy_keywords = []
entropy_keyphrases = []

for text in tqdm(texts):
    word_df = word_entropy_df(text["tokens"])

    keywords = word_df.sort_values(
        "entropy_contribution", ascending=True
    )["token"].drop_duplicates().tolist()[:5]
    entropy_keywords.append(keywords)

    ngram_df = word_entropy_df(text["ngrams"])
    keyphrases = ngram_df.sort_values(
        "entropy_contribution", ascending=True
    )["token"].drop_duplicates().tolist()[:5]
    entropy_keyphrases.append(keyphrases)

print("Top keywords by entropy contribution:")
print_keywords_lists(entropy_keywords, texts)
print("")

print("Top keyphrases by entropy contribution:")
print_keywords_lists(entropy_keyphrases, texts, True)
```

```
Top keywords by entropy contribution:
Text 1 (news): rudyard, shaffer, kipling, equus, radcliffe
Text 2 (news): leifman, --charges, soledad, o'brien, pretrial
Text 3 (science): @xmath0, rkhs, @xmath18, @xmath17, @xmath22
Text 4 (science): @xmath55, @xmath2, ikado, @xmath33, lqcd
Text 5 (book): mr., respectors, utterson, blackguardly, amities
Text 6 (book): wyllie, charlottenstrasse, mr., mrs., family”—here

Top keyphrases by entropy contribution:
Text 1 (news):
  - potter star daniel radcliffe
  - london england reuters harry
  - england reuters harry potter
  - peter shaffer 's equus
  - shaffer 's equus meanwhile

Text 2 (news):
  - miami dade pretrial detention
  - dade pretrial detention facility
  - miami dade county jails
  - soledad o'brien takes users
  - judge steven leifman says

Text 3 (science):
  - \mbox{~~and~~ q\bigl([t \infty)\bigr \ge
  - q\bigl([t \infty)\bigr \ge 1-\tau\bigr\}\,.\
  - @xcite @xcite @xcite @xcite
  - \ge \tau \mbox{~~and~~ q\bigl([t
  - @xmath168\bigr \ge \tau \mbox{~~and~~

Text 4 (science):
  - @xcite @xmath156 gev@xmath157 @xmath158
  - @xmath122 @xmath123 @xmath124 @xmath125
  - @xmath121 @xmath122 @xmath123 @xmath124
  - @xmath123 @xmath124 @xmath125 @xmath126
  - @xmath156 gev@xmath157 @xmath158 mev

Text 5 (book):
  - dr. jekyll's mr. utterson
  - m.d. d.c.l. l.l.d. f.r.s.
  - jekyll m.d. d.c.l. l.l.d.
  - carew hastie lanyon henry
  - mr. enfield dr. jekyll

Text 6 (book):
  - mr. samsa let 's
  - tearfully thanking gregor 's
  - collar mr. samsa shouted
  - of excusal mr. samsa
  - mr. and mrs. samsa
```

Using entropy contribution as our measure for keywords, we get some very interesting results, even without having to analyze an entire corpus or filter stopwords! Note that we sort in ascending order: for the tiny probabilities involved, a smaller entropy contribution means a rarer, more surprising token, which is why unusual names and symbols dominate. The results are arguably as useful as those from the TF-IDF method, insofar as they reveal proper nouns like the names of characters. Amusingly, in the scientific articles, the keywords all appear to be drawn from LaTeX formulas, which isn’t particularly useful, but it at least tells us that these are scientific documents.
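Why does sorting by entropy contribution in ascending order pick out rare words? A word’s contribution, −p·log2(p), increases with p for every p below 1/e ≈ 0.37, where it peaks, and no word comes anywhere near that probability. So, for this use, ranking by ascending contribution gives the same order as ranking by descending information content. A quick check with made-up probabilities (hypothetical values, not taken from spaCy’s table):

```python
import math


def information_content(p: float) -> float:
    """Surprisal in bits: -log2(p)."""
    return -math.log2(p)


def entropy_contribution(p: float) -> float:
    """One outcome's term in the Shannon entropy sum: -p * log2(p)."""
    return p * information_content(p)


# made-up unigram probabilities, from very common to very rare
probs = {"the": 0.05, "lawyer": 1e-5, "utterson": 1e-8}

for word, p in probs.items():
    print(f"{word:<9} info={information_content(p):5.2f} bits  contribution={entropy_contribution(p):.2e}")

by_contribution = sorted(probs, key=lambda w: entropy_contribution(probs[w]))
by_information = sorted(probs, key=lambda w: information_content(probs[w]), reverse=True)
print(by_contribution)  # ['utterson', 'lawyer', 'the']
print(by_information)   # ['utterson', 'lawyer', 'the']
```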

So far we have primarily been using statistical measures to identify keywords. But that’s not the only approach. As we continue our exploration, let’s look at the final two approaches: algorithmic and modeling.

Raking Up the Keywords

When it comes to algorithmic approaches to keyword extraction, there are a ton of methods, far too many to look at here. So we’ll just take a quick look at one of the more famous methods: Rapid Automatic Keyword Extraction (RAKE). This approach was developed by Rose et al. (2010) in their paper “Automatic Keyword Extraction from Individual Documents”. The algorithm is fairly complex, and worthy of an entire article on its own, but it boils down to the following basic steps:

- Split the text into “candidate phrases”, using stopwords and punctuation as delimiters. So, the text `"I am studying machine learning and statistics. I think statistics is really interesting."` splits at stopwords like `"I"`, `"am"`, `"and"`, `"is"`, and `"really"`, and at punctuation like `"."`, to become the candidate phrases `['studying machine learning', 'statistics', 'think statistics', 'interesting']`.

- Build a co-occurrence graph of the words in candidate phrases, where words are nodes and edges connect any words that appear in the same candidate phrase. In the above example, this would link “studying” to both “machine” and “learning”.

- Compute word scores from the degree of each word (its number of co-occurrences within candidate phrases, counting itself, which equals the total length of the phrases it appears in) and its frequency (number of appearances in the text). Each word’s score is the degree-to-frequency ratio.

- Compute phrase scores as the sum of the word scores in the phrase.

- Rank the candidate phrases by score and extract the top ones as keyphrases.
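To make the scoring steps concrete, here is a minimal, dependency-free sketch that applies them to the example sentence. It is not the spaCy-based implementation used in this article: it assumes a hypothetical five-word stopword list (`EXAMPLE_STOPWORDS`, just enough to reproduce the candidate phrases above) and treats a few punctuation marks as hard boundaries via a regex.

```python
import re
from collections import Counter

# Hypothetical stopword list: only the words needed to reproduce the example.
EXAMPLE_STOPWORDS = {"i", "am", "and", "is", "really"}


def candidate_phrases(text: str, stopwords: set[str]) -> list[list[str]]:
    """Step 1: split text into candidate phrases at punctuation and stopwords."""
    phrases = []
    # Punctuation is a hard boundary, so each fragment is handled separately
    for fragment in re.split(r"[.,;:!?]", text.lower()):
        current = []
        for word in fragment.split():
            if word in stopwords:
                # A stopword closes the phrase being built (if any)
                if current:
                    phrases.append(current)
                current = []
            else:
                current.append(word)
        if current:
            phrases.append(current)
    return phrases


def rake_scores(phrases: list[list[str]]) -> dict[str, float]:
    """Steps 2-4: word degree and frequency, then phrase score = sum of deg/freq."""
    freq = Counter()
    degree = Counter()
    for phrase in phrases:
        for word in phrase:
            freq[word] += 1
            # Each word co-occurs with every word in its phrase, itself included
            degree[word] += len(phrase)
    word_score = {w: degree[w] / freq[w] for w in freq}
    return {" ".join(p): sum(word_score[w] for w in p) for p in phrases}


text = "I am studying machine learning and statistics. I think statistics is really interesting."
phrases = candidate_phrases(text, EXAMPLE_STOPWORDS)
scores = rake_scores(phrases)

# Step 5: rank phrases from highest to lowest score
for phrase, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{score:4.1f}  {phrase}")
```

Running it ranks `studying machine learning` first (each of its words scores 3 / 1 = 3, for a phrase score of 9), then `think statistics` (2 + 1.5 = 3.5), `statistics` (1.5, since its degree of 3 is spread over two appearances), and `interesting` (1). Because summing word scores rewards every extra word, RAKE tends to favor longer phrases, which is worth keeping in mind when reading its output.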

As you might have guessed from the algorithm steps, RAKE is focused on producing keyphrases: a word’s score depends on the other words it shares candidate phrases with, so the method is built around phrases rather than isolated words. For this reason, we won’t use it to extract keywords alone. Now that we know the algorithm, we can implement it in Python, using spaCy `Doc` objects to identify the punctuation and stopwords that delimit candidate phrases.

```python
from collections import Counter

from spacy.tokens import Doc


def get_rake_phrases(doc: Doc) -> list[list[str]]:
    """
    Extract candidate phrases from a spaCy Doc using the RAKE algorithm.

    Args:
        doc (spacy.tokens.Doc): The input spaCy Doc

    Returns:
        list[list[str]]: A list of candidate phrases consisting of lists of tokens per phrase
    """
    phrases = []
    current_phrase = []
    for token in doc:
        # Stopwords, punctuation, and whitespace all act as phrase delimiters
        if token.is_stop or token.is_punct or token.is_space:
            if current_phrase:
                phrases.append(current_phrase)
                current_phrase = []
        else:
            current_phrase.append(token.text.lower())

    # Keep a phrase that runs to the end of the document
    if current_phrase:
        phrases.append(current_phrase)

    return phrases


def get_rake_word_score(phrases: list[list[str]]) -> dict[str, float]:
    """
    Calculate word scores using RAKE algorithm.

    Args:
        phrases (list[list[str]]): List of phrases

    Returns:
        dict: Dictionary of word scores
    """
    word_freq = Counter()
    word_degree = {}

    for phrase in phrases:
        for word in phrase:
            word_freq[word] += 1
            # Degree: number of words this word co-occurs with in a phrase (itself included)
            word_degree[word] = word_degree.get(word, 0) + len(phrase)

    word_scores = {}
    for word in word_freq:
        word_scores[word] = word_degree[word] / word_freq[word]

    return word_scores


def get_rake_phrase_scores(
    phrases: list[list[str]], word_scores: dict[str, float]
) -> dict[str, float]:
    """
    Calculate phrase scores using RAKE algorithm.

    Args:
        phrases (list[list[str]]): List of phrases
        word_scores (dict): Dictionary of word scores

    Returns:
        dict: Dictionary of phrase scores
    """
    phrase_scores = {}
    for phrase in phrases:
        # A phrase's score is the sum of its words' scores
        phrase_scores[" ".join(phrase)] = sum(word_scores[word] for word in phrase)

    return phrase_scores


rake_keyphrases = []

for text in tqdm(texts):
    phrases = get_rake_phrases(text["doc"])
    word_scores = get_rake_word_score(phrases)
    phrase_scores = get_rake_phrase_scores(phrases, word_scores)
    top_phrases = sorted(phrase_scores, key=phrase_scores.get, reverse=True)[:5]
    rake_keyphrases.append(top_phrases)

print("Top keyphrases by RAKE:\n")
print_keywords_lists(rake_keyphrases, texts, True)
```

Top keyphrases by RAKE:

Text 1 (news): - harry potter star daniel radcliffe gains access - massive sports car collection - uk box office chart - reported £ 20 million - kid star goes

Text 2 (news): - soledad o'brien takes users inside - leifman says 200 years ago people - ninth floor severely mentally disturbed - heavy wool sleeping bag - dade pretrial detention facility

Text 3 (science): - uniformly lipschitz continuous satisfying @xmath14 - shifted loss function @xmath17 introduced - integral operator @xmath117 satisfies @xmath242 - standard gaussian rbf kernel @xmath63 - reproducing kernel hilbert space @xmath0

Text 4 (science): - @xmath40 @xmath41 containing @xmath42 @xmath3 pairs - degree chebyshev polynomial background function - layer stereo wire drift chamber - gaussian signal function plus second - rich detector covers approximately @xmath52

Text 5 (book): - disordered sensual images running like - good qualities seemingly unimpaired - fast drawers stood open - circulation like mr. enfield - haired old woman opened

Text 6 (book): - grey earth mingled inseparably - electric street lamps shone - leaving vile brown flecks - mr. samsa twisted round - visit gregor relatively soon

As you can see, RAKE produces very different results from our previous keyphrase extractions. In the news articles, we now get a much better sense of the content. For example, the first article doesn’t just seem to be about the Harry Potter franchise, but about its star actor’s income and sports car collection. In the books, however, these phrases actually seem to tell us less than before. They are poetic and descriptive, but apart from naming a few characters, they don’t say much about what the books are about. As with any keyphrase detection method, RAKE has its limitations, especially in creative works where the very definition of a “keyphrase” is up for debate.

KeyBERT

In our final section, we’ll take a look at the last major category of keyword/phrase extractors: machine learning models. The advantage of machine learning models is that they give us the option of creating and training a model to recognize what we consider to be a keyphrase. Given a sufficient amount of annotated training data, you could create a custom keyword model.

In our example, though, we’re going to take a slightly different approach and leverage the power of [word embeddings](blog/how-does-a-computer-learn-to-read). The basic idea is that we can identify keywords or phrases by creating an embedding for each candidate and a document embedding for the text as a whole, and then finding the candidates with the highest cosine similarity to the document embedding. The intuition here is that the candidates most similar to the document are likely to be the most representative of it.

To implement this approach, we’ll use an adapted version of code developed by Maarten Grootendorst, who also developed the excellent KeyBERT library.

```python
import itertools
from typing import Callable, Optional

import numpy as np
from sentence_transformers import SentenceTransformer
from sklearn.metrics.pairwise import cosine_similarity


def top_candidates(
    candidates: list[str], similarities: np.ndarray, n: int = 10, repeat: bool = False
) -> list[str]:
    """
    Gets the top n keywords based on similarity scores.

    Args:
        candidates (List[str]): List of candidate words.
        similarities (np.ndarray): Cosine similarity scores.
        n (int): Number of keywords to return.
        repeat (bool): Whether to allow duplicate words.

    Returns:
        List[str]: The top n keywords.
    """
    top_indices = np.argsort(similarities[0])[::-1]  # Sort in descending order
    keywords = []

    if not repeat:
        # The candidate list can contain duplicates, so skip words already taken
        for i in top_indices:
            keyword = candidates[i]
            if keyword not in keywords:
                keywords.append(keyword)
            if len(keywords) == n:
                break
    else:
        keywords = [candidates[i] for i in top_indices[:n]]

    return keywords


def max_sum_sim(
    doc_embedding: np.ndarray,
    word_embeddings: np.ndarray,
    words: list[str],
    top_n: int,
    nr_candidates: int,
) -> list[str]:
    """
    Selects top_n words that are least similar to each other from nr_candidates.

    Args:
        doc_embedding (np.ndarray): Embedding of the full document.
        word_embeddings (np.ndarray): Embeddings of the candidate words.
        words (List[str]): List of candidate words.
        top_n (int): Number of keywords to return.
        nr_candidates (int): Number of initial candidates to consider.

    Returns:
        List[str]: The selected keywords.
    """
    # Similarity of each candidate to the document, and of candidates to each other
    distances = cosine_similarity(doc_embedding, word_embeddings)
    distances_candidates = cosine_similarity(word_embeddings, word_embeddings)

    # Keep only the nr_candidates words most similar to the document
    words_idx = list(distances.argsort()[0][-nr_candidates:])
    words_vals = [words[index] for index in words_idx]
    distances_candidates = distances_candidates[np.ix_(words_idx, words_idx)]

    # Exhaustively find the top_n combination with the lowest pairwise similarity
    min_sim = np.inf
    candidate = None
    for combination in itertools.combinations(range(len(words_idx)), top_n):
        sim = sum(
            distances_candidates[i][j] for i in combination for j in combination if i != j
        )
        if sim < min_sim:
            candidate = combination
            min_sim = sim

    return [words_vals[idx] for idx in candidate]


def mmr(
    doc_embedding: np.ndarray,
    word_embeddings: np.ndarray,
    words: list[str],
    top_n: int,
    diversity: float,
) -> list[str]:
    """
    Uses Maximal Marginal Relevance (MMR) to select diverse keywords.

    Args:
        doc_embedding (np.ndarray): Embedding of the full document.
        word_embeddings (np.ndarray): Embeddings of the candidate words.
        words (List[str]): List of candidate words.
        top_n (int): Number of keywords to return.
        diversity (float): Controls balance between relevance and diversity.

    Returns:
        List[str]: The selected keywords.
    """
    word_doc_similarity = cosine_similarity(word_embeddings, doc_embedding)
    word_similarity = cosine_similarity(word_embeddings)

    # Start with the single most relevant candidate
    keywords_idx = [np.argmax(word_doc_similarity)]
    candidates_idx = [i for i in range(len(words)) if i != keywords_idx[0]]

    for _ in range(top_n - 1):
        candidate_similarities = word_doc_similarity[candidates_idx, :]
        # Each remaining candidate's highest similarity to an already-chosen keyword
        target_similarities = np.max(
            word_similarity[candidates_idx][:, keywords_idx], axis=1
        )

        # Trade off relevance to the document against redundancy with chosen keywords
        mmr_scores = (1 - diversity) * candidate_similarities - diversity * target_similarities.reshape(
            -1, 1
        )
        mmr_idx = candidates_idx[np.argmax(mmr_scores)]

        keywords_idx.append(mmr_idx)
        candidates_idx.remove(mmr_idx)

    return [words[idx] for idx in keywords_idx]


def get_bert_keywords(
    model: SentenceTransformer,
    text: str,
    tokens: list[str],
    n: int = 10,
    repeat: bool = False,
    diversification_fn: Optional[Callable] = None,
    diversification_params: Optional[dict] = None,
) -> list[str]:
    """
    Extracts keywords from a text using a BERT sentence embedding model.

    Args:
        model (SentenceTransformer): A SentenceTransformer model.
        text (str): The input text.
        tokens (list[str]): List of candidate words.
        n (int): Number of keywords to return.
        repeat (bool): Whether to allow duplicate words.
        diversification_fn (Optional[Callable]): A diversification function.
        diversification_params (Optional[dict]): Parameters for the diversification function.

    Returns:
        List[str]: The top n keywords.
    """
    # Embed every candidate, and the document as a whole
    candidates_embeddings = model.encode(tokens)
    text_embedding = model.encode([text])

    similarities = cosine_similarity(text_embedding, candidates_embeddings)

    # Optionally hand selection off to max_sum_sim or mmr
    if diversification_fn:
        if not diversification_params:
            raise ValueError("Diversification function requires parameters.")
        return diversification_fn(
            text_embedding, candidates_embeddings, tokens, n, **diversification_params
        )

    return top_candidates(tokens, similarities, n, repeat)
```

```python
model = SentenceTransformer('distilbert-base-nli-mean-tokens')

bert_keywords = []
bert_keyphrases = []

for text in tqdm(texts):
    keywords = get_bert_keywords(
        model, text["text"], text["tokens"], n=5, repeat=False
    )
    bert_keywords.append(keywords)

    keyphrases = get_bert_keywords(
        model, text["text"], text["ngrams"], n=5, repeat=False
    )
    bert_keyphrases.append(keyphrases)

print("Top keywords by BERT:")
print_keywords_lists(bert_keywords, texts)
print("")

print("Top keyphrases by BERT:")
print_keywords_lists(bert_keyphrases, texts, True)
```

Top keywords by BERT:

Text 1 (news): monday, dvds, fortune, birthday, gossip

Text 2 (news): jails, cnn, inmates, jail, prisoners

Text 3 (science): advantages, popularity, @xmath168\bigr, satisfied, improved

Text 4 (science): physics, neutrinos, quark, fermi, quarks

Text 5 (book): jekyll”—he, jekyll's, murder, satan, funeral

Text 6 (book): sleepiness, sleep, slept, bed, nap

Top keyphrases by BERT:

Text 1 (news): - $ 41.1 million fortune - harry potter star daniel - reuters harry potter star - his number one movie - first five potter films

Text 2 (news): - miami dade pretrial detention - county jails are mentally - many mentally ill inmates - jails are mentally ill - miami dade county jails

Text 3 (science): - good estimators in additive - theorem quantilethm is better - advantages of additive models - many nice mathematical properties - additive models which helps

Text 4 (science): - leptonic decay @xmath71 without - leptonic decay efficiency - leptonic decay efficiency hence - decays to lighter leptons - the leptonic decay efficiency

Text 5 (book): - dr. jekyll looking deathly - dr. jekyll 's disappearance - of henry jekyll dead - henry jekyll dead - of dr. jekyll's mr.

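Text 6 (book): - excessive sleepiness after sleeping - his room crawling over - pain in bed - bed into a horrible - excessive sleepiness

The results of the BERT approach are pretty compelling, particularly the keyphrases. Performance on the books is better; the topic of the first book in particular now seems quite obvious. But as before, selecting keyphrases gets harder as texts get longer. One likely contributor is that `SentenceTransformer.encode` truncates any input longer than the model’s maximum sequence length, so for a whole book the document embedding reflects only the opening passage, which would explain why the text 6 keyphrases revolve around sleep and bed (the story opens with Gregor waking up in bed). We also have a smaller issue, most visible in text 4, where the keyphrases are near-duplicates of one another. As Grootendorst points out in his blog post on this topic, we need a way to diversify our selections. For this, he recommends max sum similarity or maximal marginal relevance (MMR). For the sake of brevity, I’ll leave those for you to try out, but the code is included above (pass `max_sum_sim` or `mmr` to `get_bert_keywords` as `diversification_fn`, with their extra arguments in `diversification_params`), and Grootendorst does an excellent job of explaining how these diversification functions work.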
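To see what the `diversity` parameter of `mmr` does, here is a dependency-free sketch of the same greedy rule, `(1 - diversity) * relevance - diversity * redundancy`, run on three made-up 2-D vectors. The vectors and candidate names are hypothetical toy data, not real sentence embeddings, and `mmr_select` is an illustrative re-implementation rather than Grootendorst’s code.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mmr_select(
    doc_vec: list[float],
    cand_vecs: list[list[float]],
    words: list[str],
    top_n: int,
    diversity: float,
) -> list[str]:
    """Greedy Maximal Marginal Relevance selection over plain Python vectors."""
    relevance = [cosine(v, doc_vec) for v in cand_vecs]
    # Start from the candidate most similar to the document
    selected = [max(range(len(words)), key=lambda i: relevance[i])]
    remaining = [i for i in range(len(words)) if i != selected[0]]

    while remaining and len(selected) < top_n:
        scores = {
            # Relevance to the document minus similarity to the closest pick so far
            i: (1 - diversity) * relevance[i]
            - diversity * max(cosine(cand_vecs[i], cand_vecs[j]) for j in selected)
            for i in remaining
        }
        best = max(scores, key=scores.get)
        selected.append(best)
        remaining.remove(best)

    return [words[i] for i in selected]


# Toy data: two near-duplicate candidates and one clearly different one
doc = [1.0, 0.0]
words = ["decay efficiency", "the decay efficiency", "drift chamber"]
vectors = [[0.9, 0.1], [0.88, 0.12], [0.6, -0.8]]

print(mmr_select(doc, vectors, words, top_n=2, diversity=0.0))
print(mmr_select(doc, vectors, words, top_n=2, diversity=0.5))
```

With `diversity=0.0` the second pick is the near-duplicate “the decay efficiency”; at `diversity=0.5` the redundancy penalty outweighs its slightly higher relevance, and “drift chamber” is chosen instead. With the article’s own functions, the equivalent call would be `get_bert_keywords(model, text["text"], text["ngrams"], n=5, diversification_fn=mmr, diversification_params={"diversity": 0.5})`.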

Final Thoughts

Over the course of this article we’ve seen that keyword and keyphrase extraction is a task with many approaches, each with its own upsides and downsides. In practice, it’s probably best to try a few different methods to see what works for a particular type of document. In some cases, a simple frequency-based approach might actually be best (though I recommend filtering out stopwords; we didn’t do that here so that we could compare methods more directly), and in others something more complex may be required.
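For reference, a stopword-filtered frequency baseline can be very small. The sketch below is a minimal illustration, not the tokenizer or stopword list used earlier in this article: the regex tokenizer and the tiny `BASIC_STOPWORDS` set are hypothetical stand-ins for spaCy’s tokenization and a full stopword list such as spaCy’s `STOP_WORDS`.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; in practice use a complete one.
BASIC_STOPWORDS = frozenset(
    {"the", "a", "an", "and", "of", "to", "in", "on", "he", "his", "is", "was", "it"}
)


def frequency_keywords(
    text: str, n: int = 5, stopwords: frozenset[str] = BASIC_STOPWORDS
) -> list[str]:
    """Return the n most frequent non-stopword tokens in text."""
    # Crude tokenizer: lowercase, then keep runs of letters and apostrophes
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(t for t in tokens if t not in stopwords)
    return [word for word, _ in counts.most_common(n)]


sample = "The cat sat on the mat, and the cat was happy."
print(frequency_keywords(sample, n=3))
```

Because `Counter.most_common` orders equal counts by first appearance, ties go to whichever word occurs earlier in the text.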

OK, before we go, let’s print out our results next to each other so you can compare. Which do you think is best?

```python
keyword_results = {
    "Frequency": tdm_keywords,
    "TF-IDF": tfidf_keywords,
    "Graph": graph_keywords,
    "Entropy": entropy_keywords,
    "BERT": bert_keywords,
}

print("Keyword Analysis Results")
for idx, text in enumerate(texts):
    print(f"\nText {idx + 1} ({text['category']}):")
    print("-" * 50)

    for method, results in keyword_results.items():
        keywords = results[idx]
        print(f"{method:25}: {', '.join(keywords)}")
```

```text
Keyword Analysis Results

Text 1 (news):
--------------------------------------------------
Frequency                : the, he, to, of, a
TF-IDF                   : potter, radcliffe, reuters, 'll, harry
Graph                    : the, he, his, a, to
Entropy                  : rudyard, shaffer, kipling, equus, radcliffe
BERT                     : monday, dvds, fortune, birthday, gossip

Text 2 (news):
--------------------------------------------------
Frequency                : the, in, to, and, they
TF-IDF                   : leifman, mentally, 's, miami, jail
Graph                    : the, in, to, and, they
Entropy                  : leifman, --charges, soledad, o'brien, pretrial
BERT                     : jails, cnn, inmates, jail, prisoners

Text 3 (science):
--------------------------------------------------
Frequency                : the, of, is, and, a
TF-IDF                   : additive, kernel, quantile, @xcite, assumption
Graph                    : the, of, is, and, a
Entropy                  : @xmath0, rkhs, @xmath18, @xmath17, @xmath22
BERT                     : advantages, popularity, @xmath168\bigr, satisfied, improved

Text 4 (science):
--------------------------------------------------
Frequency                : the, of, and, in, is
TF-IDF                   : tag, @xcite, mass, st, mev
Graph                    : the, of, and, is, in
Entropy                  : @xmath55, @xmath2, ikado, @xmath33, lqcd
BERT                     : physics, neutrinos, quark, fermi, quarks

Text 5 (book):
--------------------------------------------------
Frequency                : the, and, of, to, i
TF-IDF                   : utterson, my, i, hyde, jekyll
Graph                    : the, and, of, a, to
Entropy                  : mr., respectors, utterson, blackguardly, amities
BERT                     : jekyll”—he, jekyll's, murder, satan, funeral

Text 6 (book):
--------------------------------------------------
Frequency                : the, to, and, he, his
TF-IDF                   : gregor, she, 's, sister, he
Graph                    : the, to, and, he, his
Entropy                  : wyllie, charlottenstrasse, mr., mrs., family”—here
BERT                     : sleepiness, sleep, slept, bed, nap
```

```python
keyphrase_results = {
    "Frequency": ngram_keywords,
    "TF-IDF": ngram_tfidf_keywords,
    "Graph": ngram_graph_keywords,
    "Entropy": entropy_keyphrases,
    "RAKE": rake_keyphrases,
    "BERT": bert_keyphrases,
}

print("Keyphrase Analysis Results")
for idx, text in enumerate(texts):
    print(f"\nText {idx + 1} ({text['category']}):")
    print("-" * 50)

    for method, results in keyphrase_results.items():
        keywords = results[idx]
        print(f"{method:25}: {', '.join(keywords)}")
```

```text
Keyphrase Analysis Results

Text 1 (news):
--------------------------------------------------
Frequency                : harry potter, he told, in a, of the, he has
TF-IDF                   : harry potter, this year, this year he, potter and the order, potter and the
Graph                    : harry potter, in a, he told, of the, actor says he
Entropy                  : potter star daniel radcliffe, london england reuters harry, england reuters harry potter, peter shaffer 's equus, shaffer 's equus meanwhile
RAKE                     : harry potter star daniel radcliffe gains access, massive sports car collection, uk box office chart, reported £ 20 million, kid star goes
BERT                     : $ 41.1 million fortune, harry potter star daniel, reuters harry potter star, his number one movie, first five potter films

Text 2 (news):
--------------------------------------------------
Frequency                : mentally ill, it 's, leifman says, on the, forgotten floor
TF-IDF                   : leifman says, mentally ill, it 's, the forgotten floor, the forgotten
Graph                    : leifman says, it 's, mentally ill, on the, the forgotten floor
Entropy                  : miami dade pretrial detention, dade pretrial detention facility, miami dade county jails, soledad o'brien takes users, judge steven leifman says
RAKE                     : soledad o'brien takes users inside, leifman says 200 years ago people, ninth floor severely mentally disturbed, heavy wool sleeping bag, dade pretrial detention facility
BERT                     : miami dade pretrial detention, county jails are mentally, many mentally ill inmates, jails are mentally ill, miami dade county jails

Text 3 (science):
--------------------------------------------------
Frequency                : of the, in the, learning rates, @xmath159 quantile, for the
TF-IDF                   : learning rates, @xmath159 quantile, quantile regression, loss function, given by
Graph                    : of the, in the, learning rates, for the, for some
Entropy                  : \mbox{~~and~~ q\bigl([t \infty)\bigr \ge, q\bigl([t \infty)\bigr \ge 1-\tau\bigr\}\,.\, @xcite @xcite @xcite @xcite, \ge \tau \mbox{~~and~~ q\bigl([t, @xmath168\bigr \ge \tau \mbox{~~and~~
RAKE                     : uniformly lipschitz continuous satisfying @xmath14, shifted loss function @xmath17 introduced, integral operator @xmath117 satisfies @xmath242, standard gaussian rbf kernel @xmath63, reproducing kernel hilbert space @xmath0
BERT                     : good estimators in additive, theorem quantilethm is better, advantages of additive models, many nice mathematical properties, additive models which helps

Text 4 (science):
--------------------------------------------------
Frequency                : of the, in the, is the, and the, et al
TF-IDF                   : et al, the tag, the leptonic, the st, leptonic decay
Graph                    : of the, is the, in the, and the, the tag
Entropy                  : @xcite @xmath156 gev@xmath157 @xmath158, @xmath122 @xmath123 @xmath124 @xmath125, @xmath121 @xmath122 @xmath123 @xmath124, @xmath123 @xmath124 @xmath125 @xmath126, @xmath156 gev@xmath157 @xmath158 mev
RAKE                     : @xmath40 @xmath41 containing @xmath42 @xmath3 pairs, degree chebyshev polynomial background function, layer stereo wire drift chamber, gaussian signal function plus second, rich detector covers approximately @xmath52
BERT                     : leptonic decay @xmath71 without, leptonic decay efficiency, leptonic decay efficiency hence, decays to lighter leptons, the leptonic decay efficiency

Text 5 (book):
--------------------------------------------------
Frequency                : of the, in the, it was, and the, mr. utterson
TF-IDF                   : mr. utterson, the lawyer, of my, i was, it was
Graph                    : of the, in the, it was, and the, of a
Entropy                  : dr. jekyll's mr. utterson, m.d. d.c.l. l.l.d. f.r.s., jekyll m.d. d.c.l. l.l.d., carew hastie lanyon henry, mr. enfield dr. jekyll
RAKE                     : disordered sensual images running like, good qualities seemingly unimpaired, fast drawers stood open, circulation like mr. enfield, haired old woman opened
BERT                     : dr. jekyll looking deathly, dr. jekyll 's disappearance, of henry jekyll dead, henry jekyll dead, of dr. jekyll's mr.

Text 6 (book):
--------------------------------------------------
Frequency                : gregor 's, of the, he had, it was, in the
TF-IDF                   : gregor 's, his sister, his father, he had, he was
Graph                    : gregor 's, of the, he had, it was, in the
Entropy                  : mr. samsa let 's, tearfully thanking gregor 's, collar mr. samsa shouted, of excusal mr. samsa, mr. and mrs. samsa
RAKE                     : grey earth mingled inseparably, electric street lamps shone, leaving vile brown flecks, mr. samsa twisted round, visit gregor relatively soon
BERT                     : excessive sleepiness after sleeping, his room crawling over, pain in bed, bed into a horrible, excessive sleepiness
```

Oh, and in case you’re curious, the following are the texts we used! Were you able to guess correctly?

- A news article about Harry Potter star Daniel Radcliffe turning 18 and gaining access to a $41.1 million fortune.

- A news article with CNN correspondents going behind the scenes of a jail where many inmates are mentally ill.

- Learning rates for the risk of kernel based quantile regression estimators in additive models

- Improved Measurement of Absolute Branching Fraction of Ds to tau nu

- The Strange Case of Dr. Jekyll and Mr. Hyde by Robert Louis Stevenson

- Metamorphosis by Franz Kafka